Hi, my machine is a Lenovo M90p SFF desktop with an i7 CPU and 4 GiB of DDR3 memory. I am struggling to create a persistent, bootable GhostBSD USB drive. The target is a 64 GiB 'Verbatim Store N Go' USB drive, and I am using another drive of the same model as the installer. I have tried both the 'Full disk configuration' and the 'Custom (Advanced Partitioning)' schemes without success. The installer starts, and it seems to get further with the custom scheme, but then it abruptly quits with no notice or message (a message did eventually appear; see further down).

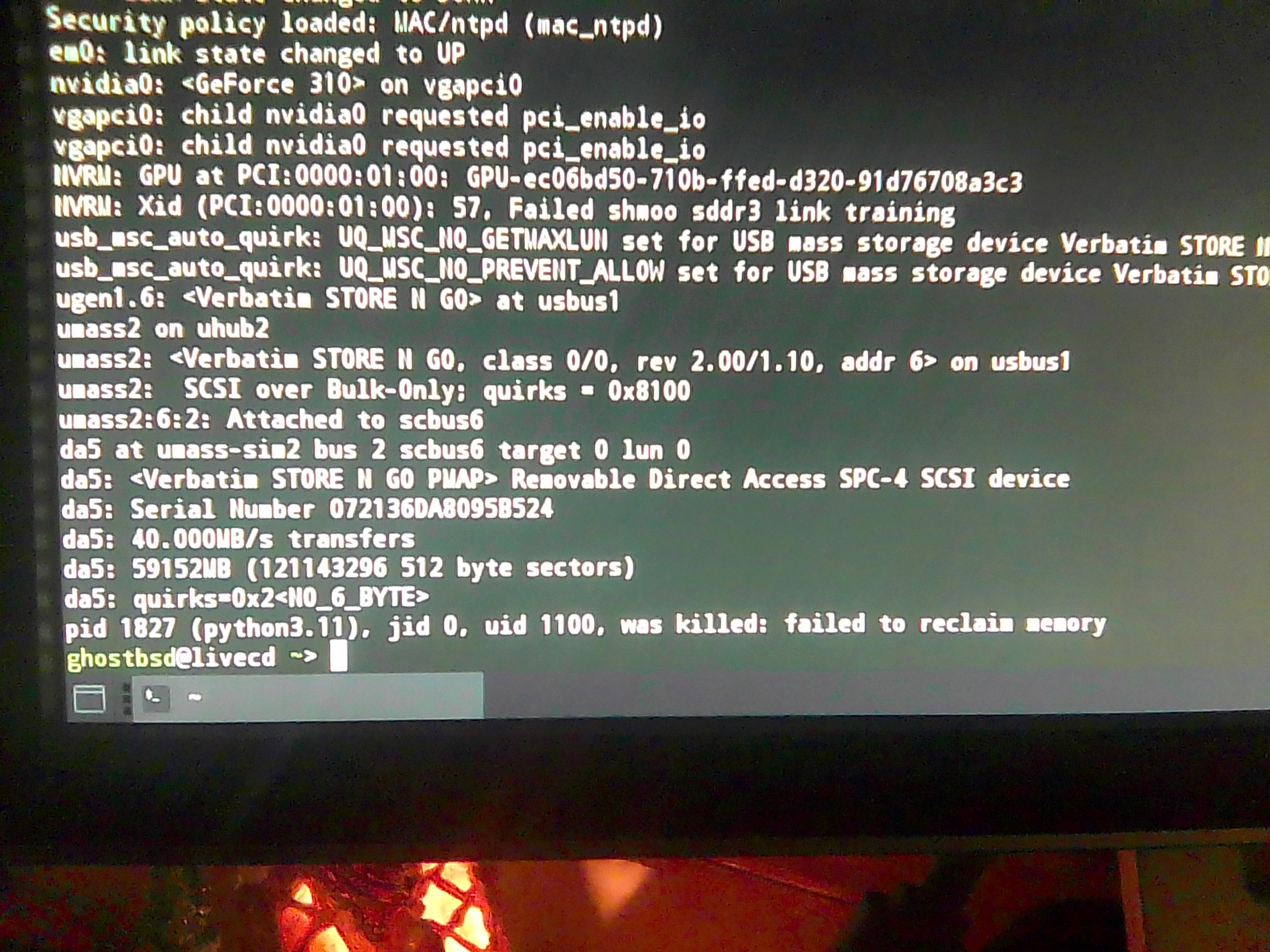

I opened a MATE terminal in the live installer session and ran `dmesg`. Near the end of the output I see the following:

What are your thoughts on this? Also, does the following line provide any further insight into the issue?

    pid 1827 (python3.11). jid 0, uid 1100, was killed: failed to reclaim memory

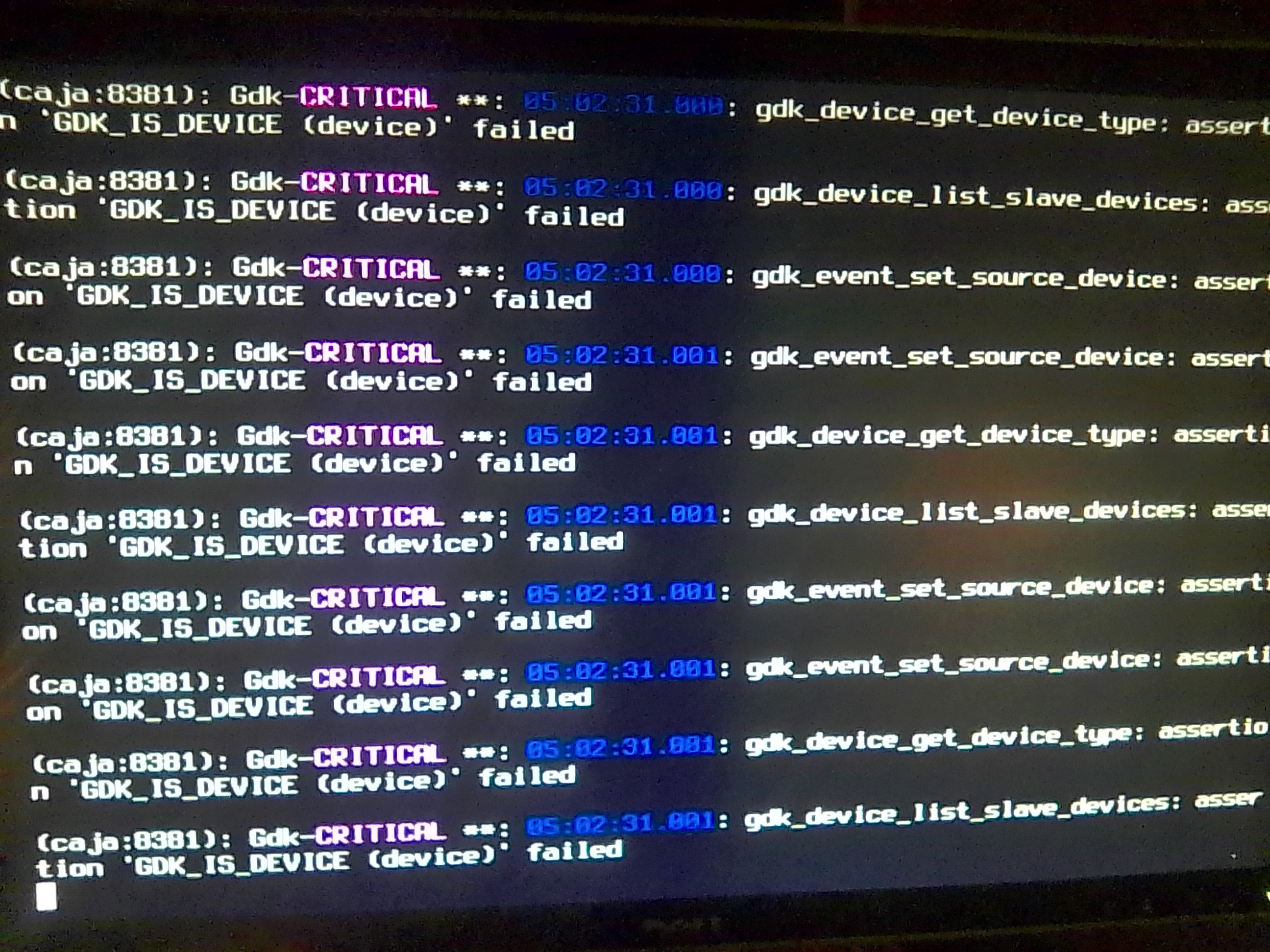
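
If more of these kills show up in `dmesg`, a small filter can list them one per line. This is a minimal `sh`/`sed` sketch, assuming the message layout of the line quoted above (`pid <N> (<name>) ... was killed: <reason>`); the function name `oom_kills` is hypothetical and not part of GhostBSD.

```sh
# oom_kills: hypothetical helper (not part of GhostBSD).
# Reads dmesg output on stdin and prints one summary line per killed process.
# The sed pattern assumes the layout: pid <N> (<name>) ... was killed: <reason>
oom_kills() {
    sed -n 's/.*pid \([0-9][0-9]*\) (\([^)]*\)).*was killed: \(.*\)$/\2 (pid \1): \3/p'
}
```

Usage: `dmesg | oom_kills`. For the line above it prints `python3.11 (pid 1827): failed to reclaim memory`.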

Update: I retried once more and was finally able to see a graphical failure message:

I tried to open the link shown in the error message, but the machine seemed partly locked up at that point. Then the installer exited on its own and the screen displayed the following:

I waited a bit and was then able to press Ctrl+C to get back to a shell prompt (I could maybe have done so sooner).

Then, from the /tmp/.pc-sysinstall directory, I uploaded the log to termbin with `$ nc termbin.com 9999 < pc-sysinstall.log`, which gave https://termbin.com/s9ft

I have also pasted the log below:

    kern.geom.debugflags: 16 -> 16
    kern.geom.label.disk_ident.enable: 0 -> 0
    Deleting all gparts
    Running: gpart destroy -F /dev/da5
    gpart: Device busy
    Clearing gpt backup table location on disk
    Running: dd if=/dev/zero of=/dev/da5 bs=1m count=5
    5+0 records in
    5+0 records out
    5242880 bytes transferred in 0.247124 secs (21215548 bytes/sec)
    Running: dd if=/dev/zero of=/dev/da5 bs=1m oseek=59147
    dd: /dev/da5: end of device
    6+0 records in
    5+0 records out
    5242880 bytes transferred in 0.350251 secs (14968920 bytes/sec)
    Running gpart on /dev/da5
    Running: gpart create -s GPT -f active /dev/da5
    gpart: geom 'da5': File exists
    Running: gpart add -a 4k -s 512 -t freebsd-boot /dev/da5
    da5p3 added
    Running: zpool labelclear -f /dev/da5p1
    failed to clear label for /dev/da5p1
    Stamping boot sector on /dev/da5
    Running: gpart bootcode -b /boot/pmbr /dev/da5
    bootcode written to da5
    Running: gpart add -a 4k -s 58639M -t freebsd-zfs /dev/da5
    gpart: autofill: No space left on device
    Running: zpool labelclear -f
    missing vdev name
    usage:
        labelclear [-f] <vdev>
    Running: gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i 1 /dev/da5
    partcode written to da5p1
    bootcode written to da5
    NEWFS: /dev/da5p2 - ZFS
    EXITERROR: The poolname: zroot is already in use locally!
    ZFS Unmount: zroot/var/tmp
    Running: zfs unmount zroot/var/tmp
    ZFS Unmount: zroot/var/mail
    Running: zfs unmount zroot/var/mail
    ZFS Unmount: zroot/var/log
    Running: zfs unmount zroot/var/log
    ZFS Unmount: zroot/var/crash
    Running: zfs unmount zroot/var/crash
    ZFS Unmount: zroot/var/audit
    Running: zfs unmount zroot/var/audit
    ZFS Unmount: zroot/usr/ports
    Running: zfs unmount zroot/usr/ports
    ZFS Unmount: zroot/tmp
    Running: zfs unmount zroot/tmp
    ZFS Unmount: zroot/home
    Running: zfs unmount zroot/home
    Running: zfs unmount zroot/ROOT/default
    Unmounting: /mnt
    Running: umount -f /mnt
    umount: /mnt: not a file system root directory
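
Because the log is long, the failing steps can be pulled out with a small filter that pairs each error with the step that produced it. This is a minimal sketch in plain `sh` and `awk`, assuming the `Running:` / `NEWFS:` line format seen in the log; the function name `scan_sysinstall_log` and the list of error strings are illustrative assumptions, not anything pc-sysinstall provides.

```sh
# scan_sysinstall_log: hypothetical helper (not part of pc-sysinstall).
# Reads a pc-sysinstall log on stdin and prints every line that looks like an
# error, indented under the most recent "Running:" or "NEWFS:" line before it.
# The error strings are taken from the log above; extend the list as needed.
scan_sysinstall_log() {
    awk '
        /^(Running|NEWFS): / { ctx = $0; next }
        /Device busy|File exists|No space left|already in use|failed to|missing vdev|EXITERROR|not a file system/ {
            printf "%s\n    -> %s\n", (ctx == "" ? "(no preceding command)" : ctx), $0
        }
    '
}
```

Usage: `scan_sysinstall_log < /tmp/.pc-sysinstall/pc-sysinstall.log`. On the log above it flags, among others, the "Device busy" failure of `gpart destroy -F /dev/da5` and the "zroot is already in use locally" error after the NEWFS step.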

I hope this gives more insight for troubleshooting the problem I am having creating a persistent GhostBSD USB drive.

-Regards
